O4

O4 – From 2008 to 2013, the research strand on shaping a responsive environment’s media response to inhabitant activity evolved into a substantially refined and more powerful software system: the OZONE media choreography framework.

This system allows:

(a) the reading of arbitrary configurations of sensors (including cameras and microphones, but also any array of physical sensors that can be interfaced to a computer through serial inputs);

(b) feature extraction in realtime;

(c) continuous evolution of behavior and orchestration;

(d) mappings to networks of video synthesis computers, realtime sound synthesis computers, theatrical lighting systems, or any electronically or digitally controllable system. (We have controlled, for example, household fans and lamps, networks of small commercial toys, and LEDs.)

In brief, the system uses pattern recognition on motion-capture data to animate and mix the motions of virtual puppets, model-free learning using methods from partial differential equations, and computational physics of lattices and dynamical systems.
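As a hedged illustration of how "computational physics of lattices" can turn sensor activity into a continuously evolving output, here is a small Python sketch. It is not Ozone code, and the update rule (damped diffusion on a ring of cells), the constants, and the function names are assumptions chosen for readability. Sensor activity enters as a source term; each step spreads values to neighbouring cells and lets them decay. The result is then clamped to [0, 1] intensities for a hypothetical row of lamps.

```python
"""Sketch: sensor activity driving damped diffusion on a 1-D ring lattice.

Assumption: the update rule, constants, and names are illustrative only;
this is a generic lattice model, not Ozone's actual dynamics.
"""

from typing import List


def step_lattice(u: List[float], source: List[float],
                 diffusion: float = 0.2, decay: float = 0.05) -> List[float]:
    """Advance the lattice by one explicit finite-difference step.

    Each cell follows  u_i <- u_i + D*(u_{i-1} - 2*u_i + u_{i+1}) - k*u_i + s_i
    with periodic (ring) boundaries. With non-negative inputs the scheme stays
    non-negative and stable while 2*diffusion + decay <= 1.
    """
    n = len(u)
    new_u = []
    for i in range(n):
        # u[i - 1] wraps to the last cell when i == 0 (Python negative index).
        laplacian = u[i - 1] - 2.0 * u[i] + u[(i + 1) % n]
        new_u.append(u[i] + diffusion * laplacian - decay * u[i] + source[i])
    return new_u


def to_intensities(u: List[float], ceiling: float = 1.0) -> List[float]:
    """Scale by a ceiling and clamp into [0, 1], e.g. for a hypothetical row of lamps."""
    return [min(max(x / ceiling, 0.0), 1.0) for x in u]


if __name__ == "__main__":
    cells = [0.0] * 8
    for frame in range(20):
        # Hypothetical sensor: a person is detected above cell 3 for the first 5 frames.
        source = [0.0] * 8
        if frame < 5:
            source[3] = 0.3
        cells = step_lattice(cells, source)
    print([round(x, 3) for x in to_intensities(cells)])
```

In this sketch, activity at one location spreads to neighbouring outputs and fades after the stimulus ends, giving a smooth, spatially extended response instead of on/off switching. A 2-D grid would follow the same pattern with a five-point Laplacian.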

LIGHT

[vimeo]https://vimeo.com/88192813[/vimeo]

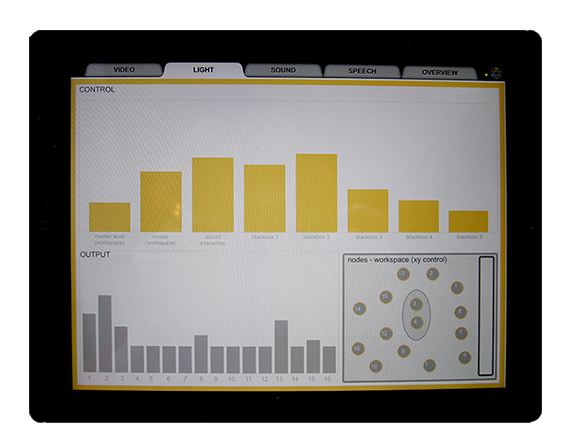

IPAD CONTROL VIEW

SOUND

VIDEO

TECHNIQUE [SOFTWARE]

The Ozone media choreography system factors into the following software abstractions: (1) sensor input conditioning, (2) simple statistics, (3) a continuous state engine governing the behavior of the media engines, (4) realtime video re-synthesis instruments, (5) realtime sound re-synthesis instruments, and (6) animation interfaces to other protocols and devices, such as DMX, custom LED networks, and actuators. The system is implemented in Max/MSP/Jitter, substantially extended with custom code for computational physics, computer vision, and other methods.
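The layering above can be illustrated with a short Python sketch covering abstractions (1), (2), (3), and (6). Ozone itself is built in Max/MSP/Jitter, so this is not its code. Each layer is filled in with an assumed, deliberately simple technique. Conditioning is clamping plus an exponential moving average. The statistics are Welford's running mean and variance. The continuous state is first-order relaxation toward a target. The DMX output is an 8-bit channel value. The class names, the 10-bit sensor range, and all constants are hypothetical.

```python
"""Sketch of a layered pipeline:
sensor conditioning -> simple statistics -> continuous state -> DMX level.

All names and constants are hypothetical, for illustration only.
"""

import math


class Conditioner:
    """Clamp a raw reading to a known range, normalize to [0, 1], and smooth it (EMA)."""

    def __init__(self, lo: float, hi: float, alpha: float = 0.3):
        self.lo, self.hi, self.alpha = lo, hi, alpha
        self.value = None  # no smoothed value until the first reading

    def update(self, raw: float) -> float:
        x = (min(max(raw, self.lo), self.hi) - self.lo) / (self.hi - self.lo)
        if self.value is None:
            self.value = x
        else:
            self.value = self.alpha * x + (1 - self.alpha) * self.value
        return self.value


class RunningStats:
    """Running mean and sample variance (Welford's algorithm)."""

    def __init__(self):
        self.n, self.mean, self._m2 = 0, 0.0, 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        return self._m2 / (self.n - 1) if self.n > 1 else 0.0


class ContinuousState:
    """A state variable that relaxes toward a target instead of switching discretely."""

    def __init__(self, rate: float = 2.0):
        self.rate = rate
        self.value = 0.0

    def step(self, target: float, dt: float) -> float:
        # Exact solution of dv/dt = rate * (target - v) over one time step dt.
        self.value = target + (self.value - target) * math.exp(-self.rate * dt)
        return self.value


def to_dmx(level: float) -> int:
    """Map a level in [0, 1] to an 8-bit DMX channel value (0-255)."""
    return round(min(max(level, 0.0), 1.0) * 255)


if __name__ == "__main__":
    cond, stats, state = Conditioner(0, 1023), RunningStats(), ContinuousState()
    for raw in [100, 400, 900, 1023, 800]:  # hypothetical 10-bit sensor readings
        x = cond.update(raw)
        stats.update(x)
        level = state.step(target=x, dt=1 / 30)  # assumed 30 Hz control rate
        print(to_dmx(level), round(stats.mean, 3), round(stats.variance, 4))
```

In this sketch, the state update uses the exact exponential solution rather than the Euler step `v += rate * (target - v) * dt`, so it stays stable for any frame time. Media parameters glide between behaviors rather than jumping when a threshold is crossed, with `rate` setting how quickly.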


Sha Xin Wei, Michael Fortin, Tim Sutton, Navid Navab, “Ozone: Continuous State-based Media Choreography System for Live Performance,” ACM Multimedia, October 2010, Firenze.

PEOPLE

Sha Xin Wei, system architecture, experiment design, media choreography

Navid Navab, realtime sound

Julian Stein, realtime lighting

Evan Montpellier, visual programming, state engine

Previous

Harry Smoak, media choreography, lighting, experiment design

Michael Fortin, computational fluid dynamics and video

Morgan Sutherland, state engine, sensor fusion, media choreography, project management

Tyr Umbach, realtime video, state engine

Tim Sutton, realtime sound

Jean-Sebastien Rousseau, realtime video

Delphine Nain, computational fluid dynamics and video

Yon Visell, Emmanuel Thivierge, state engine