Story Telling Space

This research project is an in depth investigation for the realization of a system, able to combine gesture and vocal recognition for interactive art, live events and speech based expressive applications. Practically, we have created a flexible platform for collaborative and improvisatory storytelling combining voice and movement. Our work advances both conceptual and technical research relating speech, body, and performance using digital technologies and interactive media.

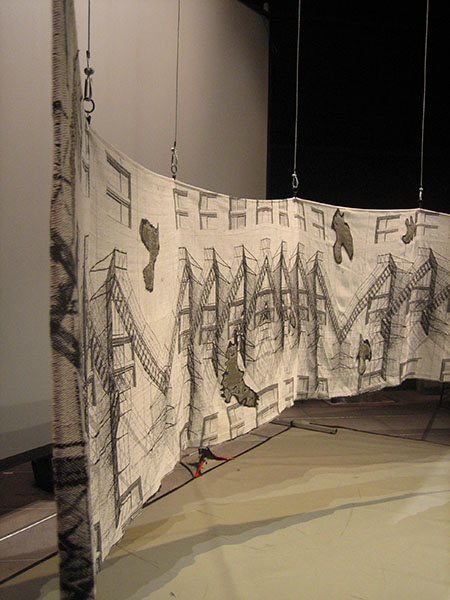

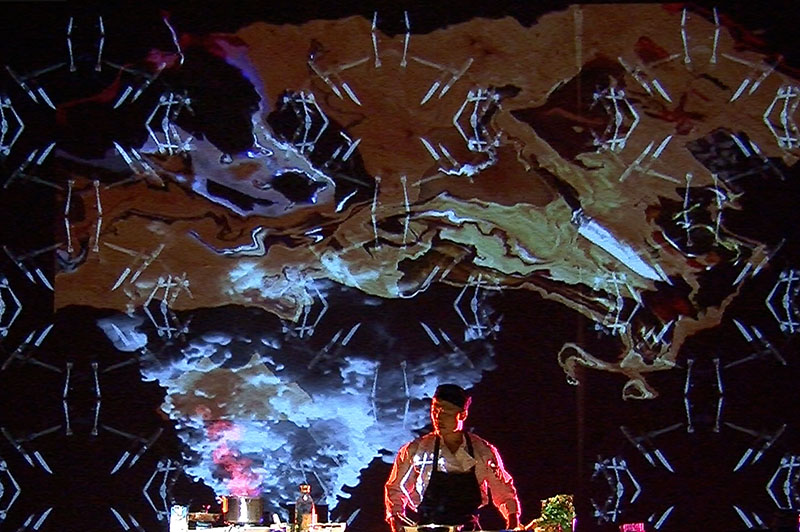

We create an immersive audio-visual Story Telling Room that responds to voice and sound inputs. The challenge is to set up an efficient speech feature extraction mechanism using as good microphone conditions as possible. Leveraging the TML’s realtime media choreography framework, we map speech to a wide variety of media such as animated glyphs, visual graphics, light fields and soundscapes. Our desiderata for mapping speech prosody information centre on reproducibility, maximum sensitivity, and nil latency. Our purpose is not to duplicate but to supplement and augment the experience of the story that is being unfolded with a performer and audience in ad hoc, improvised situations using speech and voice for expressive purposes.

[wpsgallery]

We are exploring the possibilities of Natural Language Processing in the context of live performance. As the performer speaks the system analyzes the spoken words and with the help of the Oxford American Writer’s Thesaurus (OAWT), each semantically significant lexical unit initiates its own semantic cluster.

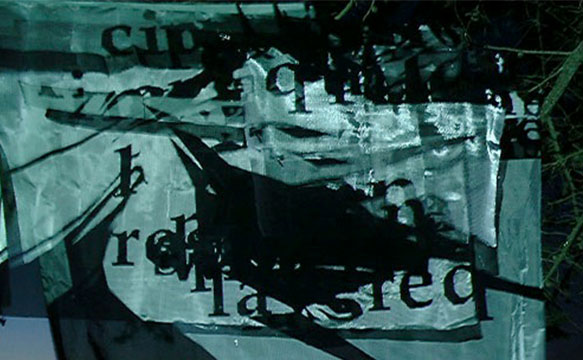

As the story is being unfold by the performer the environment is shifting from a state to another according to the censoring data that occur from the system analysis. Furthermore, we are exploring the possibilities of transcribed text from spoken utterances. The spoken words of the performer already burry a communicative value as they already have attached to them a semantic component that has been evolved and transform through out the history of language. The objective of this prototype is to see what happens when light, imagery and texture is added in the text and how it is perceived by the performer.

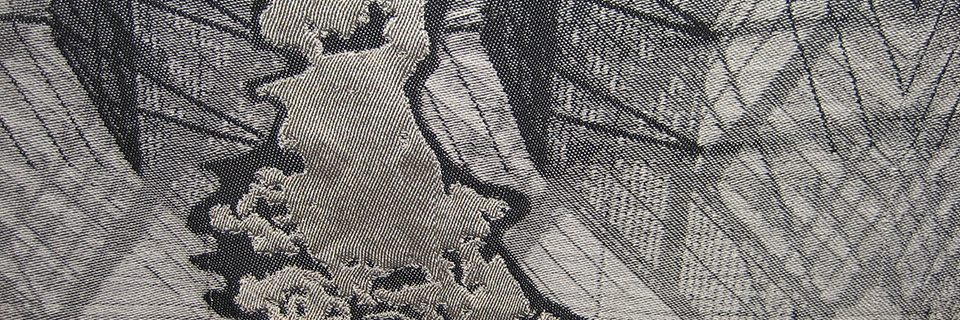

The pieces of text when encountered as audio-visual forms animated by quasi- intelligent dynamics in digital media become widely perceived as animate entities. People tent to regard animated glyphs as things to be tamed or played with rather than a functional and abstract system of communicative symbols.

[youtube]http://www.youtube.com/watch?v=tLPw1WjHoic[/youtube] [youtube]http://www.youtube.com/watch?v=TyF_-m6RWkY[/youtube]

TECHNIQUE

We have create two stand-alone Java application that perform the Speech Recognition and Speech Analysis tasks. For the mapping techniques we have used partially the already existing ozone state engine and we have create a new state engine in MAX/ MSP for better results in the context of improvisatory story telling

COLLABORATORS

Nikolaos Chandolias, real-time Speech Recognition & Analysis, System Design

Jerome DelaPierre, real-time video

Navid Navab real-time sound

Julian Stein, real-time lights

Michael Montanaro, choreographer/director

Patricia Duquet, Actress

Sha Xin Wei, Topological Media Lab

Jason Lewis, Obx Labs

MORE INFO

Story Telling Space Documentation