Tabletap

A performance choreographed around a chef and sonified objects: fruit, vegetables, meat, knives, pots and pans, cutting board and table.

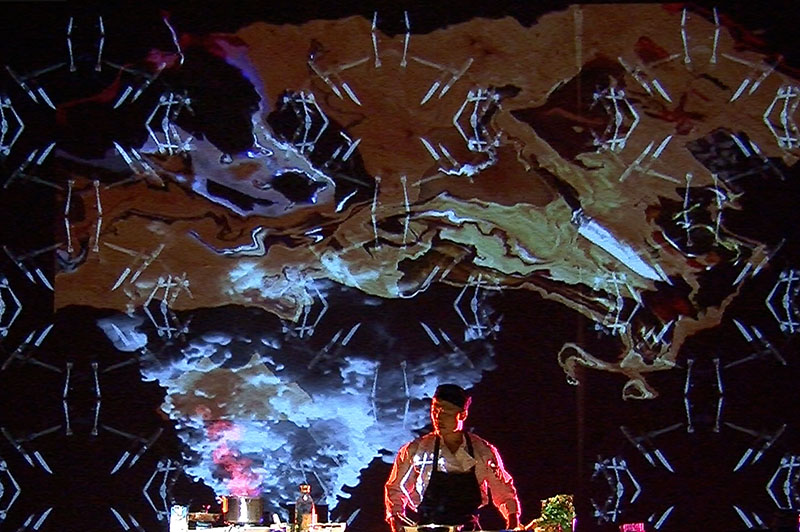

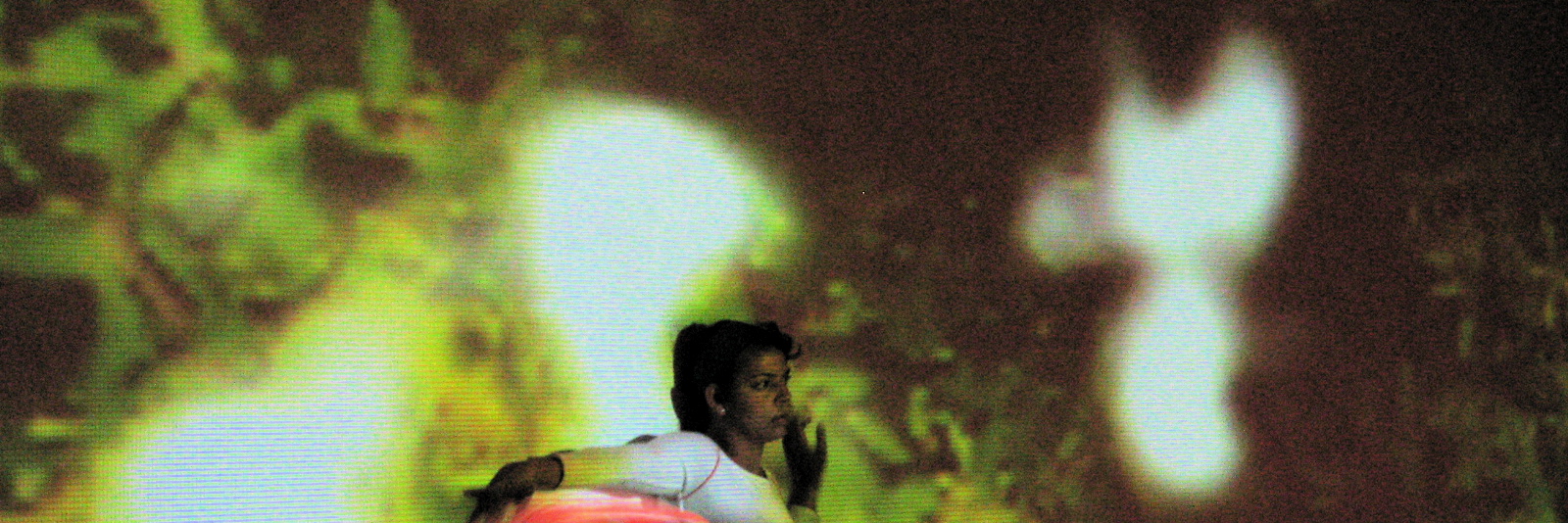

Cooking*, the most ancient art of transmutation, has become, over a quarter of a million years, an unremarkable domestic practice. But in this everyday practice, things perish, transform, and nourish other things. Enchanting the fibers, meats, wood and metal with sound and painterly light, we stage a performance made from the gestures of cooking, scripted from the recipes of cuisine both high and humble. Panning features virtuosic chefs who are also movement artists, such as Tony Chong.

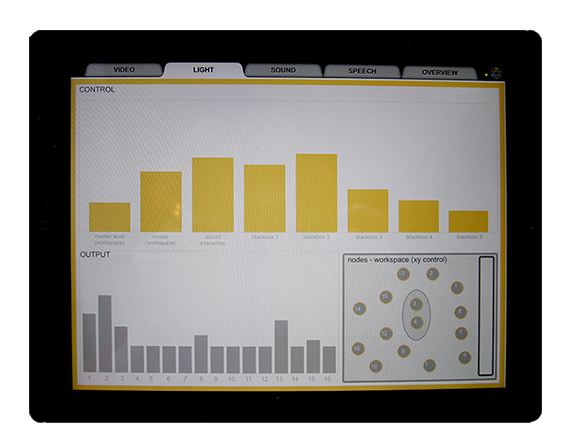

Within our responsive scenography system, every cooking process is transformed into an immersive multimedia environment and performance: a multi-sensory experience composed of scent, light, video, sound, movement, and objects. Every process is experienced across many senses at once. The sizzle of hot oil and the mouthwatering aroma of onion and garlic hit the audience within an audio-visual thunderstorm. At the very end, the audience is invited to taste a sample of the dish within the accumulated sonic environment.

The acoustic state evolves via transmutations of sound, light and image in an amalgam not of abstract data but of substances like wood, fire, water, earth, smoke, food, and movement. Panning allows the performers to modulate these transmutations with their fingers, ears and bodies: the transmutation of movement into sound, chemical reaction into sound, and sound into light and image.

Panning is the first part in a series of performances exploring how everyday gestures and events could become charged with symbolic intensity.

[vimeo]http://vimeo.com/51474504[/vimeo]

[vimeo]http://vimeo.com/42057497[/vimeo]

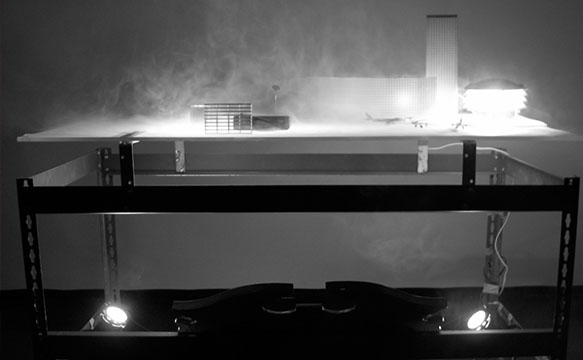

Materials:

Self-contained responsive kitchen set embedded into our portable table, 8.1 speaker system, projectors, food